Why is “AI” suddenly everywhere?

In late 2022, we saw the beginning of the AI explosion when OpenAI released ChatGPT, a chatbot built on its GPT (Generative Pre-trained Transformer) models.

This was not their first text-generating system. The GPT framework had been available to anyone who signed up for the OpenAI “playground,” a testbed for developers to build things with their services. Some people had used this to make a basic website where you could input any text and have the AI autocomplete it. This was a lot of fun to mess with. It would only vaguely get the vibe right, but it was easy to make it do what you wanted, even though it wasn’t actually good at doing it. It was good at picking up on different types of context.

This was far from the first thing OpenAI had done. For years before this they had been working on other AI-solvable problems. One of their most insightful experiments involved teaching AI agents to play hide & seek with each other. The hiders would learn to hide better, the seekers would learn to seek better, and the whole thing became an AI arms race to find the next workable exploit to ensure a win.

OpenAI's legacy

What made ChatGPT special

ChatGPT was not a sudden invention from whole cloth. It was the result of applying a new type of alignment to the existing GPT technology.

GPT is “trained” on an enormous amount of text, much of it English. Training means feeding example text into the model and letting the computer adjust a series of numbers, or “weights,” that capture which words tend to come after which, given what came before in the training data.

For example, every time the model was given training text that included the word “example” coming after the word “for,” at the beginning of a sentence, it learned that when you start a new sentence with the word “For,” there’s a 90-something percent chance that the next word will be “example.” This is the process that it performs over and over again very fast for every bit of generated text that it puts out.
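To make the “For” then “example” idea concrete, here is a minimal sketch of a toy word-pair counter in Python. It is not how GPT works internally: real models use a neural network that looks at far more context than one previous word. The tiny corpus and the function names (`train_bigram_counts`, `next_word_probabilities`) are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_counts(sentences):
    """Count how often each word directly follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probabilities(counts, prev):
    """Turn the raw follow-up counts for `prev` into probabilities."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}
    return {word: n / total for word, n in followers.items()}


# Made-up training text: "For" is followed by "example" 3 times out of 4.
corpus = [
    "For example cats sleep a lot",
    "For example dogs bark",
    "For example birds sing",
    "For now we wait",
]

counts = train_bigram_counts(corpus)
probs = next_word_probabilities(counts, "For")
print(probs)  # {'example': 0.75, 'now': 0.25}
```

With more training text that starts sentences with “For example,” the probability for “example” would creep up toward the 90-something percent mentioned above.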

What they did differently with ChatGPT was, instead of having it simply continue writing at the end of the user’s input, they adjusted the weights to make it mimic a conversation. The model would now prioritize simulating a response to a message, rather than simply finishing the message.

Right now, one of the biggest questions in computer science is whether this approach can be scaled up to make increasingly smarter artificial minds. Some companies are betting big on this, and making AI models that are larger and larger. But there’s a debate about whether the quantity of training data and the number of simulated neurons are enough, or whether the actual quality of the data is more important.

But this doesn’t explain why “AI” has become such a common buzzword. To explain that, one needs to know the story of ChatGPT’s user growth.

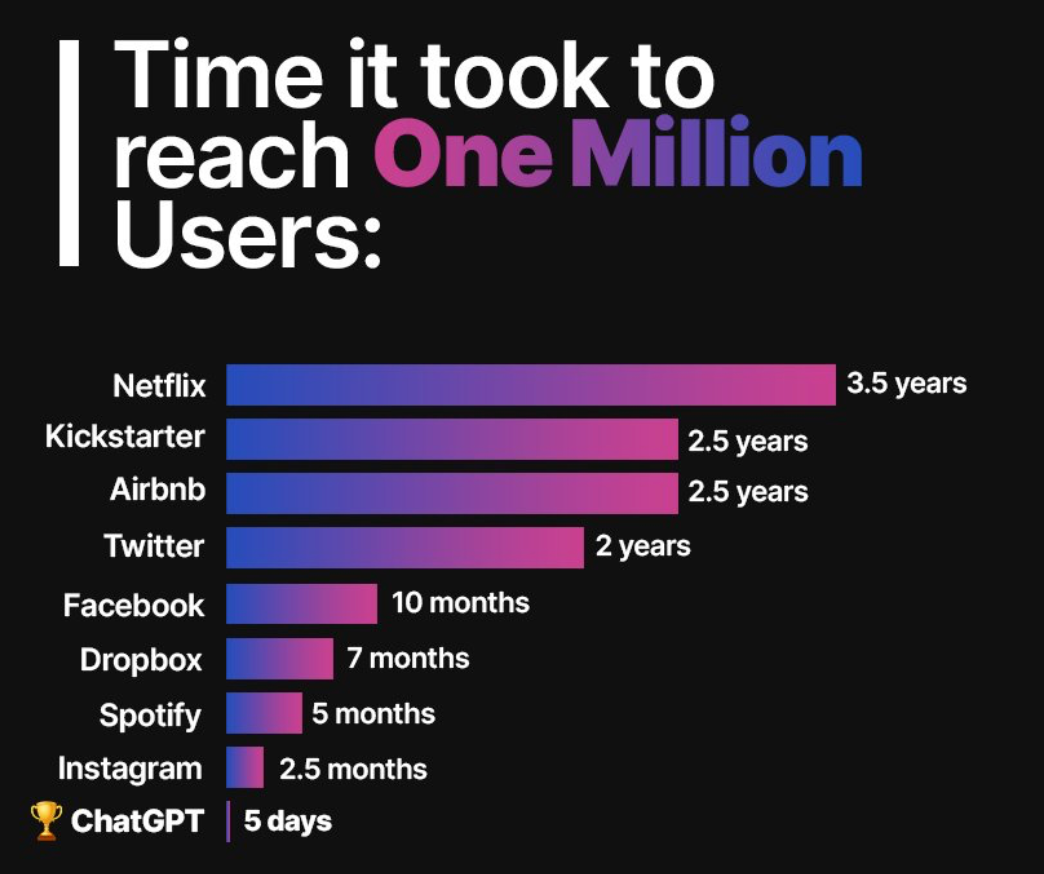

ChatGPT reached a million users very quickly

The growth of ChatGPT’s user base was astronomically fast. It was the immediate “next big thing” when it became available. People were talking about the technological singularity being here. It was a huge leap in what the general public could see was available to them. It wasn’t just that the technology was available to use, but how easily people could see that others were using it. This viral spread, combined with the technology’s accessibility and widespread (if uncertain) use cases, ensured that nobody on the internet at the time could escape its presence. And then, when OpenAI introduced a paid tier that guaranteed access to a service that regularly reached capacity, the value proposition was clear, and many people took it. Everyone who signed up for a ChatGPT Plus subscription was signaling that an intelligent system that could answer natural-language questions and hold a conversation was something people were willing to pay $20/month for.

This is why the “AI” trend became a craze, which set off a gold-rush-level surge of “AI chatbots” flooding onto the scene. This is why every developer and their dog is now working on either a ChatGPT clone, a ChatGPT wrapper app that adds no value, or a ChatGPT plugin. Or building an “AI assistant” into their app that just uses GPT to summarize things or “write for you.” There’s nothing special about 95% of these apps. Some, like Elephas, are run by genuinely dedicated developers who want to push the medium forward. But for every Elephas there are at least 99 $99/month “instant chat friend” scam shovelware apps.

In addition to the explosion of AI-themed grifts, there was also a smaller explosion of genuine advancements in language modeling: other companies trying to achieve the same thing from the ground up, without attaching themselves to OpenAI’s specific models. Multiple competing large language models have tried to rival GPT’s dominance.

Comparing different AI models is hard

Anthropic’s Claude 3 models, for example, came out a few weeks ago and are said to surpass GPT-4 in key areas like logical reasoning and context length. But it’s very difficult to compare the models and find out which one is smarter. For one thing, their outputs are not the same every time. You can ask both models the same question ten times and get a slightly different answer every time. And there’s also the fact that when you re-generate a response, the system accounts for the fact that you’re re-generating it, and assumes that there was something wrong or not ideal about the last response.
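The run-to-run variation described above comes largely from the way these models pick each next word: they sample from a set of probabilities instead of always taking the single most likely option. Here is a rough Python sketch of that idea, assuming a common “temperature” setting that controls how adventurous the pick is. The scores below are invented for illustration, and `sample_next_word` is a hypothetical helper, not any vendor’s actual API.

```python
import math
import random


def sample_next_word(scores, temperature=1.0, rng=random):
    """Pick one word at random, weighted by softmax(score / temperature)."""
    words = list(scores)
    scaled = [scores[w] / temperature for w in words]
    biggest = max(scaled)  # subtract the max so exp() cannot overflow
    weights = [math.exp(s - biggest) for s in scaled]
    return rng.choices(words, weights=weights, k=1)[0]


# Invented scores for the word that follows some prompt.
scores = {"Paris": 2.0, "France": 1.0, "the": 0.5}

# Ask the "same question" ten times: the answers are not always identical.
rng = random.Random(0)
answers = [sample_next_word(scores, temperature=1.0, rng=rng) for _ in range(10)]
print(answers)

# A very low temperature makes the top-scoring word win essentially every time.
print(sample_next_word(scores, temperature=0.01, rng=random.Random(1)))
```

This is one reason head-to-head comparisons are slippery: a single answer from each model is one draw from a distribution, so a fair comparison needs many samples per question.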

This all leads to a weird bidirectional rat race. Some are desperate to distinguish their technology as being just as good as ChatGPT or better, while others are trying to ride on GPT’s coattails by making an app that can meaningfully build on top of the GPT framework.

So to recap: you have OpenAI, Anthropic and Mistral who I consider the leaders. Just behind them, you have the people building on top of those things. Making apps that do things like record & summarize meetings, chat with a bot that’s an expert on a document you gave it, or cute little desk toys that you can talk to for fun. Then a ways back, you have the grifters who make apps that don’t really do anything besides act like a middleman for the AI. They charge you extra, and pretend like you couldn’t get the same thing for cheaper by going to the same source on your own. And that, the last category, makes up at least 70% of what comes up if you dare to search “AI” in the App Store right now.

My recommendations

Perplexity

If you want to use an LLM for research, writing help, or any of the other edge use cases you like, the easiest catch-all recommendation I think I can make is Perplexity. It has both free and paid tiers, and the functionality you get for free is very compelling.

Their pro subscription gives you access to multiple models, which means you can compare them yourself and decide which one suits your needs the best, instead of having to pay for each individual model to compare them.

Perplexity’s strength as a research aid comes from the way it integrates web search directly into its responses. Think of Perplexity like your expert Googler. It can do multiple searches and look at all the results in a few seconds, then give you a summary of its findings to answer your question. There’s also a “focus” feature which lets you narrow down the search results to a category like academic papers only, Reddit, or YouTube, or disable the research function entirely and rely solely on the LLM’s knowledge. This last option is called the “writing” focus mode because it is better suited to writing an original work based on the model’s own built-in knowledge, instead of deferring to the contents of search results.

Perplexity is a great all-around research aid and writing assistant, but there are some reasons to use OpenAI’s own last-mile delivery.

ChatGPT

I won’t waste time talking about the general purpose uses of the general purpose ChatGPT because the platform has grown to include a host of “custom GPTs” that “anyone” can make. Read: the best, most compelling ones on the store will be the ones made by website owners who integrate the service directly into their site. But you can also make your own custom GPT to align with your specific needs.

There is a “create a new GPT” screen, which gives you two chat windows. On the left is your chat with the “GPT maker” bot, and on the right is your place to test and interact with your freshly-being-made GPT. I’m getting tired of seeing those three letters.

You have a conversation with this chatbot about what kind of chatbot you want to make. It asks you a few questions about how you want your custom bot to behave and respond. It asks if there are specific interactions you want it to steer towards or avoid. Not only that, but it asks if there is a particular dialect you want it to use.

This might spur your imagination of a pirate-themed math teacher bot, or other quirky combinations of traits, and these are all doable. It’s possible to build nuanced personalities and specific workflows into these bots. You can also provide them with information they will always use in their responses.

It’s at the stage right now where it takes a bit of creativity to figure out how to get the most out of it, and that’s why I find it exciting and fun. As for how useful it is, that fluctuates depending on when I have a task that I can easily and safely delegate to an AI.

There is also a “memory” feature in the works, which lets ChatGPT create memories about things you tell it to remember; it can refer to things it remembers to influence and inform its responses. This feature is still in beta and as a tester of it I can confirm that it still has several kinks to be ironed out. Big ones. More like oversights than kinks. But the kind that shouldn’t be terribly challenging to fix.

Claude

In terms of the models themselves, my favorite is Claude. It is by far the most intelligent LLM I have spoken with. Its website is a bit lacking (there's no mobile app or native voice input or output), but the model is available through Perplexity.

Of the ones I've tried at time of writing, Claude displays the most "presence of mind" in my "testing." GPT-4 is no slouch and is not dumb, but Claude is just better at picking up on subtle patterns and engaging with nuance, rather than simply referring to it. Meaning, sometimes ChatGPT will just say something is very complex and involves a lot of things, and give a brief hand-waving overview, whereas Claude is more willing to dive into the nitty-gritty.

Wrapping up

This is an ongoing development that’s changing rapidly (but maybe not as rapidly as some people, like YouTube clickbait artists, would have you believe). So if you want to stay up-to-date on what I, personally, consider to be the most noteworthy developments in AI, tech in general, and other things tech and art related, make sure you’re subscribed to the free email newsletter :), and thank you for reading.