What Rabbit is working on now

Now that the Rabbit r1 has officially started shipping to the first pre-order customers, the startup of just 17 people is doing its best to keep up with the unexpected hype and demand around its first product. They’ve taken a substantial risk, and right now they seem to be in the gap between the two trapezes, as some are finding the r1 rather disappointing at launch.

Arriving in what one tech reviewer described as “an un-reviewable state,” the r1 has struck many users as unfinished. The functionality out of the box on day one was quite limited. Specifically advertised functions like ordering DoorDash and Uber are just barely functional. The device currently serves primarily as a voice interface for Perplexity. And while that in and of itself is arguably worth the low asking price of the device, the fact remains that a whole lot more functionality was promised and implied to exist on or before day one.

Based on what I’ve been able to learn, the device did launch with unfinished software, but that doesn’t need to be a problem for very long. They have the core of it working, and they still need to build it out.

The Rabbit Hole Web Portal

The web portal is supposed to be how your rabbit is able to “perform tasks on your behalf.” To do that, it has to be signed in as you, with an authorized session open on your behalf. So there has to be a way for you, an authentic human, to grant the system access, which means signing in on a website where you link your r1 to your account and then link your account to your services.

The original claim was more like this: you can sign into almost anything through the portal and use services such as Uber and DoorDash. The implication was that you sign into the app on the web through their web portal, and the LAM interacts with it for you.

The way it works right now, you seem to only be able to sign into a few apps, namely Uber, DoorDash, and Spotify, the other app specifically mentioned and demoed on day one. So Twitter and Reddit are saying all the LAM stuff is fake and it’s just using APIs to interface directly with the apps, like Siri integration. So Reddit figures this is just a $200 Siri, and we all know how much everyone loves Siri.

My charitable guess is they wanted to get the hardware into people’s hands first, because it would make testing at scale easier and cheaper, and help make back some of that R&D. It’s only been out for about a week and they’re only 17 people.

Pessimistically, they were lying and they can’t do what they said they can. It’s Elizabeth Holmes all over again. Realistically, they were banking on being able to make it work by a certain date, and it may or may not still come through. Optimistically, it is mostly working and they just have to work out a few kinks. Or they have a plan and it’s just taking longer than anticipated because of real life, and good art gets delayed all the time.

Assuming it all works out, there are a few other things they have announced that they will move on to.

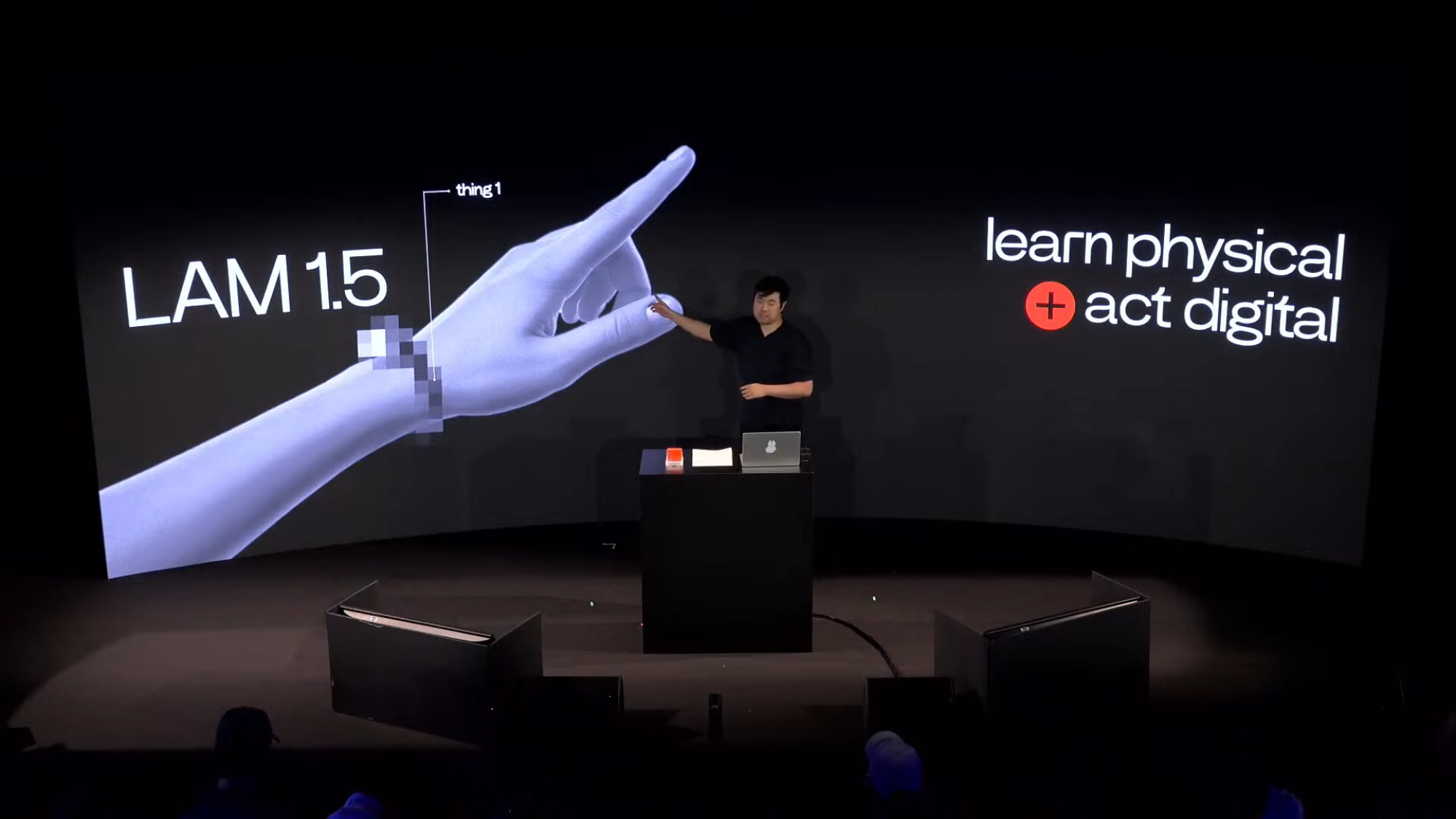

“Thing 1”

Jesse cheekily teased this one towards the end of the keynote. He briefly showed a picture of a person’s hand and wrist wearing a thin device like a watch, but heavily pixelated. You can tell what it is, but it’s too pixelated to see what it looks like. The hand is pointing.

While this image was up, Jesse started talking about “LAM 1.5” and how you would want to have the ability to just point to things in real life and ask questions about them. So they’re working on something like that. A wrist-worn AI camera vision assistant.

A desktop operating system

They want you to be able to run their large action model on your own computer. This means an operating system you interact with by speaking with it and, presumably, get most computer-based tasks done by asking. This could be a huge deal. It would be a huge step towards OS1, the software powering Samantha from the movie Her. And I’m not talking about the whole romance thing, although maybe that too someday soon, but specifically the actual computer stuff she does.

Remember the very first interaction with Samantha. She helps Theodore go through and organize his messy email inbox. She asks for his permission, then scans his folders, and immediately they start having a back and forth conversation about what’s in there, what he wants to keep, and what can be thrown out, and he mentions that he thought some of his writings were funny. She laughs and says something like “Oh yeah, I see those, they are funny. Here, I’ll set them aside.” That is my favorite example, from that movie, of a piece of technology we are very close to actually having.

Generative UI

First, what UI is.

The UI, or User Interface, has always been the key design challenge in making computers easier to use. How do you give the user access to everything the computer can do without overwhelming them?

The earliest computers wasted no time on this question. Before the first Graphical User Interface (GUI) it was nothing but commands and arguments for you. You were lucky if you didn’t have to deal with punch cards. The computer itself was a mysterious monolithic thing you could get something out of if you knew how.

Gradually, computers became smaller and smaller, which meant they were more powerful per square inch, which meant eventually you could comfortably fit one on your home office desk. But they took longer to get friendlier.

Eventually, someone had the idea to use the screen to display a series of buttons that the user could select by moving a thing around on the desk with their hand and pressing a button on it with their finger. We do this so often now that it seems like a totally natural commonplace thing. But I mean really think about it. That idea when it first came about was absolutely world-shaking. Nothing like it had ever really existed, but it closely mimics how real life works. Using the mouse mimics moving your hand around, flexing your fingers to touch and grab things. Windows mimic pieces of paper on a desk.

It was intuitive, but not. You still had to learn how to use it. Granted, the amount you had to learn was less, but not zero. A lot of us use computers in our daily lives, and we tend to think it’s as natural and intuitive to everyone as it feels to us. But it’s really not.

Now, what does “generative UI” mean?

Generative is the term that applies to the current AI boom. Generative AI is the proper term. The GPT in ChatGPT stands for Generative Pre-trained Transformer. Basically it means AI used to “generate” things like images and text from a given input or “prompt.”

So what Generative UI would mean is having a system that changes the buttons and content of the screen based on a series of inputs. It would mean designing a system that can understand what you’re looking for and put it in front of you. If you’ve ever played with any of the image-generating AIs, it would be similar to that. It would adjust itself to the user’s needs.
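To make that a little more concrete, here is a minimal sketch of the idea in Python. None of this is Rabbit’s code, and I have no idea how they would actually build it. The `Screen` class, the `INTENTS` table, and the `generate_screen` function are all names I made up for illustration, and plain keyword matching stands in for the generative model a real system would presumably use.

```python
from dataclasses import dataclass, field


@dataclass
class Screen:
    """A generated screen: a title plus the buttons worth showing right now."""
    title: str
    buttons: list[str] = field(default_factory=list)


# Hypothetical intent table. A real generative UI would ask a model what to
# show; keyword matching stands in for it so this example runs on its own.
INTENTS = {
    "ride": Screen("Where to?", ["Home", "Work", "Enter address"]),
    "food": Screen("What are you hungry for?", ["Reorder last meal", "Browse nearby"]),
    "music": Screen("Now playing", ["Play", "Skip", "Add to playlist"]),
}

# Shown when nothing in the request is recognized.
FALLBACK = Screen("What do you need?", ["Speak", "Type"])


def generate_screen(request: str) -> Screen:
    """Return the screen for the first known keyword found in the request."""
    words = request.lower().split()
    for keyword, screen in INTENTS.items():
        if keyword in words:
            return screen
    return FALLBACK


if __name__ == "__main__":
    print(generate_screen("Get me a ride home"))
    print(generate_screen("hello there"))
```

The point is only the shape of it: the request goes in, and the buttons on screen are decided afterwards, instead of being laid out in advance by a designer. Swap the lookup table for a model and you have the rough outline of what “generative UI” is describing.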

Wrap up

Rabbit’s mission is “to create a computer so intuitive, you don’t need to learn how to use it.”

In 2007, Apple told the world that they had made a smartphone that was easier to use. They had done something similar with the Macintosh in 1984: a desktop computer for businesspeople that was easier to use. Rabbit wants to break free from this form of human-computer interaction, where you still have to learn how to use the computer. Naturally, that’s going to mean starting with something really simple and branching out from there.

Right now, though, to remain as unbiased as possible, it’s important to acknowledge that some expectations were not met. But the wave of negativity online has been overblown. And I get it. People are wary of lofty technology claims after Elizabeth Holmes and Elon Musk. But just be patient, let him cook, watch and see what happens.

My take

Personally, I believe in this project and its vision, and I feel in my heart that a lot of the online criticism misses the point. The people asking why this couldn’t be just another smartphone app are overlooking what this thing is for. The goal is to get away from apps. To get away from the need to use an app, and instead to focus on tasks that need to be done.

I hate having to remember the stupid brand names of all the individual apps that I have to jump between to do stuff. I could create a system of Siri shortcuts, but then I’d just be moving the problem down a peg by changing the list of commands I have to memorize, and remember which one is which. I still can’t seem to get my HomePod Siri to understand that when I say “the lights,” I mean my specific bedroom lights, every single time, so please stop asking “which one?”

I believe in their stated approach, and I think it’s totally possible that they can pull it off. And, sorry Jesse, but if they fail, another company will copy the basic idea. This user interface paradigm IS the future of how we “do things on computers.” How we “get things done.” Just ask the computer to do it and it does it.