Apple Vision Pro initial launch review

The Apple Vision Pro has been the most fascinating Apple product launch I’ve experienced firsthand. Fascinating because it is difficult to describe the experience in a way that really gets it across to the listener. It’s harder to explain and understand the Vision Pro than it is to just put it on and use it. It’s a bit like trying to explain colors to a blind person: you can say something that feels meaningful to you, but it’s only meaningful to people who can already see color. With that said, I’m still going to try to explain the experience of using it, from a few different directions.

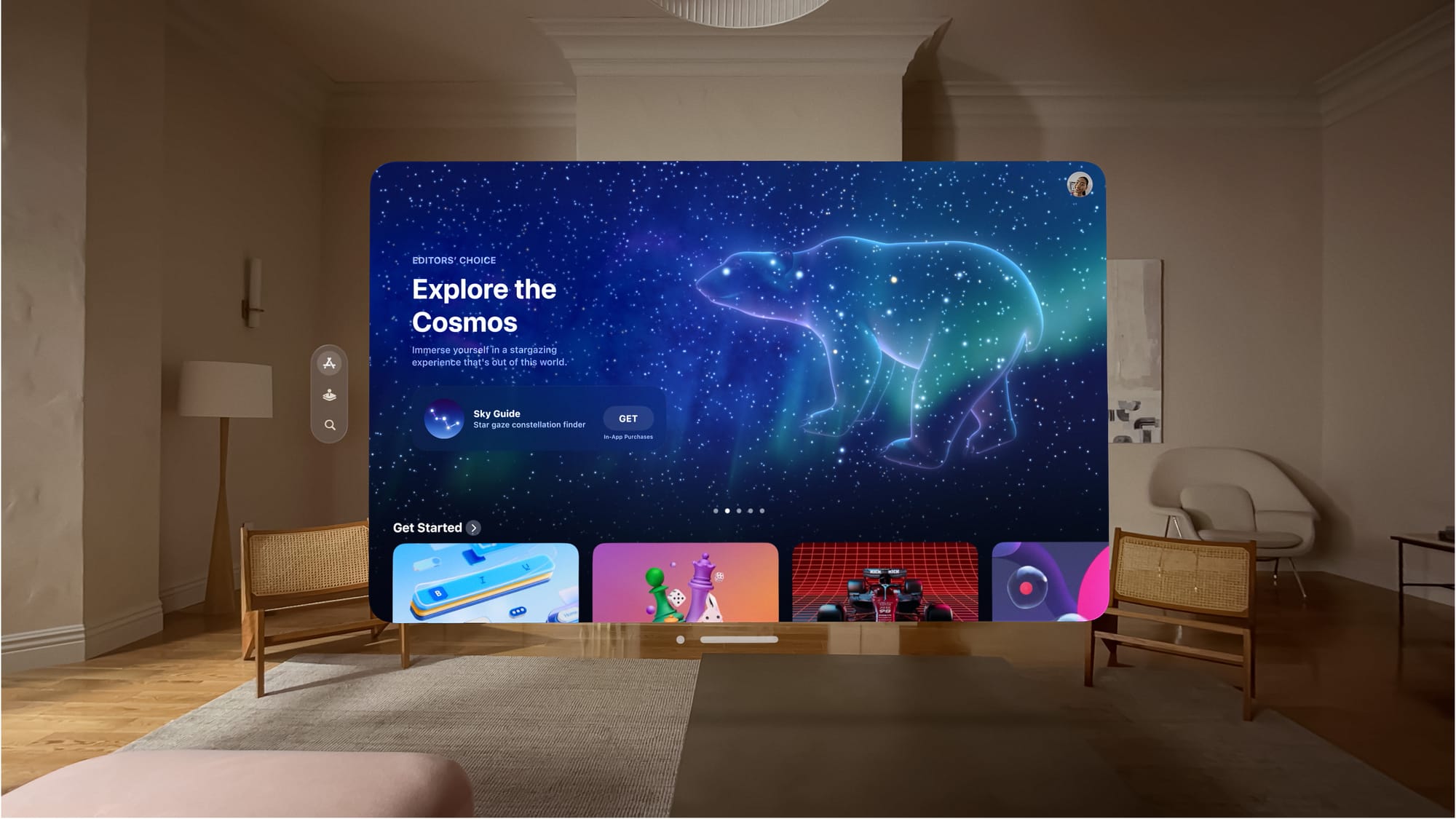

I have a window floating in front of me that I can move anywhere I want in the room: straight ahead, above or below my eye line, off to the side, close up or far away. I can have multiple windows all around me like this, each one positioned exactly where I want it. I “tap” on things by tapping my finger and thumb together while looking at what I want to select. To move windows and objects, I look at the bar below them, pinch, and move my hand the way I want the window to move, just like moving a real object.

This “look and tap” is the default way you click on things in Vision Pro, which means it’s how you do basically everything. In the time it took you to read and comprehend that, I could have opened and closed several windows and menus, navigated through web forms, or swiped through pictures without consciously thinking about any of the steps I just described. It just happens, the same way you don’t think about holding your phone and tapping the screen. It feels physically different, but psychologically very familiar. Some have likened it to the iPad experience, and I would agree with that.

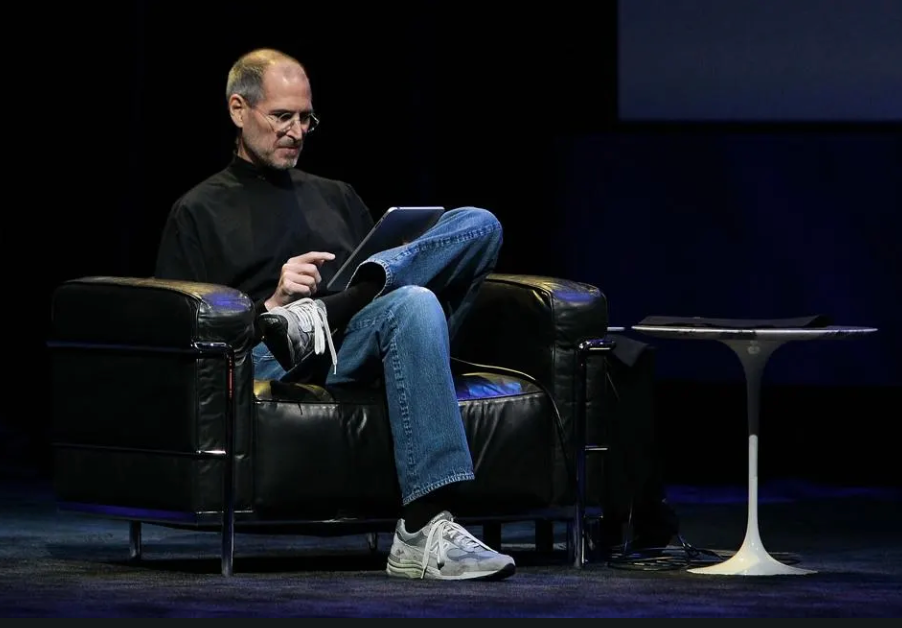

The iPad’s biggest achievement wasn’t how much it let you do, but how it let you do it: sitting back in a comfy chair, holding the website you’re reading in your hands like a magazine. This was the “killer” use case that Steve Jobs wanted everyone to know was the “whole point” of the iPad. Not that you could sit at your desk and use a less powerful computer to do fewer things, but that you could bring some of your computing down to earth with you, on your couch.

When the original iPhone came on the scene, it was very different from the universal glass brick we know today. The original iPhone didn’t have copy and paste, couldn’t set a home screen background image, and had to be plugged into a computer for initial setup. Think about those three features for a second. Oh yeah, and you know how everyone’s always complaining that you can’t put iPhone icons “anywhere you want,” because they stack to the top left? Well, would you rather not be able to move your icons AT ALL? Because that’s what it was like in version one.

The original iPhone couldn’t even do something as simple as copy and paste text, and it still sold over a million units in its first year. So it’s a very real question: if the iPhone was so limited and unfinished, why was it so successful?

It was the experience of using it. It wasn’t just that it was so different from what came before it, but that it was different in meaningfully better ways that no company before Apple had bothered to look into. And the thing that encapsulated all of that was the scrolling.

When Steve Jobs unveiled the iPhone to the world, the biggest reaction wasn’t to the fact that it was also an iPod, or to the multi-touch screen technology itself. The big reaction came when he simply took his finger and scrolled. It was the first time a digital interface had been made to mimic real physics in such a natural and intuitive way. It made people look at their BlackBerrys and wonder why they didn’t already work that way.

Steve Ballmer, then CEO of Microsoft, was very outspoken at the time, confidently claiming that the iPhone “doesn’t appeal to business customers because it doesn’t have a keyboard.” He turned out to be wrong, because the iPhone’s software keyboard surprised everyone by being good enough to actually use.

So the iPhone was ridiculed at first for being more expensive and apparently less useful, but features added over time after launch shaped it into what it is today. It has changed so much that people barely remember the half-baked initial release anymore.

There are a lot of similarities between iPhone OS 1.0 and visionOS 1.0 and 1.1: the inability to rearrange home screen icons, the relatively lackluster system menus and customization settings, and the general quirks of the system.

Apple are using the initial launch to nail the basics: room tracking, eye and hand tracking, voice input, spatial audio, audio ray tracing, and the details of blending environments (the fog effect, the way objects farther away fade out faster, etc.). They had to get all of that right before moving on to building on top of that foundation.

This is why I feel the online Vision Pro discourse has largely missed the mark: people are confusing what the Vision Pro is now with what it will always be. They’re confusing the beginning for the middle. People expect the first version of every Apple product to jump straight to the standard set by Apple’s other current products. But Apple doesn’t work this way, and that’s part of why they’re so successful. They don’t try to make something exactly how people expect it to be right away; instead, they focus on getting the basics right first. They understand that everything you build on top of something depends on that thing actually working. They understand the importance of a good foundation.

Here’s another fun fact about the iPhone and its development: it was very rushed. It took about two years in total, and everyone working on it was terrified and stressed beyond belief. It was a huge pain in the ass, a thousand impossible problems. It’s true that they were building on things others had made, but they could only work with what they had. The multi-touch technology was basically the most important piece, and it actually came from an acquisition of another company rather than something they invented themselves.

OK, enough rambling about historical precedent. Why do I use the Vision Pro, why don’t I use it, what is it good for, what don’t I feel the need to put it on for, and where do I see it in my life?

First, the most obvious use cases that Apple are pushing right now are the “personal movie theater” experience, the “surrounded by windows, deep in a flow of work” experience, and the general VR pitch, but with better graphics, which does make a difference here. The big push for VR as “the next big thing” was largely about transporting you to alternate realities, locations, and experiences, which seems like a natural progression from what we currently do with real-time computer graphics, i.e. gaming. Video games are capable of providing a huge range of experiences. It only seems natural that the same kind of creativity that goes into narrative games like Gone Home or Firewatch, which offer emotionally enriching artistic experiences, could go into making a genuinely memorable augmented-reality experience, like an art installation or a great piece of architecture.

But all of that, as lofty as it may sound, is genuinely only the beginning of what this technology can offer. And Apple knows this. That’s why two of the environments are still labeled “coming soon.” They want you to know this is the beginning: their “first” spatial computer. Because, as the saying goes, this is the worst this technology will ever be.

As it stands right now, you get back what you put into it. If you want a lot out of it, you might need to spend some time at an Apple Store trying on light seals until you find the right one. You might have to redo the eye tracking setup multiple times if it doesn’t work properly on the first try. It doesn’t work well in cars, though it does have a “travel mode” for airplanes. The battery lasts about two hours, which doesn’t sound long because it isn’t, but once you start using it you might find it hard to wear for that long without a break anyway. And if you wear glasses, there’s a special pair of “optical inserts” you have to buy, because the headset isn’t designed to be worn over glasses. And they don’t support all prescriptions, only “most” of them, so depending on your vision there’s a chance you can barely use this thing at all. But there’s also VoiceOver, Apple’s built-in screen reader, an accessibility tool for blind users.

Now why would they bother including an accessibility feature aimed at fully blind users on a product whose core purpose is catering precisely to eyesight? Because Apple Vision Pro is not just about vision. It’s about information. It’s about people. It’s about the way people interact with information, and the way information exists in our lives. The way we orient ourselves to media. The way we conceptualize digital things in a physical world.

Using the Vision Pro subtly changed how I think about my phone, iPad, and Mac. I used to see them as objects that could display things, but now I think of them more as little windows into the “information world,” to give it a name, or maybe the “Apple world.” And the Apple Vision Pro gives you a full view into that world. Instead of my text messages being limited to the boundaries of a display I can hold, I can have them float in front of me wherever it makes sense. Either way, the messages “exist” like a physical object. When they’re pulled up on my phone, my phone “becomes” the object that “is” my Messages window. So having Messages pulled up on my phone, docked on my desk while I write a document on my Mac, can be replicated by having the document in front of me with a Bluetooth keyboard and the Messages window pulled up to my right. With the Vision Pro, it’s the same experience, except there are no other objects there: just me, the keyboard, and the computer I’m wearing on my face.

The advantage this offers is that I can do this “anywhere.” Instead of being bound to my home office, I can sit out in the backyard and write when the weather is nice. I can bring it to a hotel and always have a big enough screen with me to get some work done, or to really enjoy a movie. Because let me ask you something: why is it a trope for people to want to hang out in a hotel room and watch movies in bed? On that shitty TV, with its shitty speaker and the light glaring on the screen? Really? I’ve never enjoyed a movie on a hotel TV. I’ve tried many a time to enjoy that experience, but it always falls short for me.

It makes sense to me why I love watching movies in this thing: it completely eliminates the problem of ambient light on the screen by sheer physics. There’s nothing between your eyes and the displays, and the light seal blocks the light that would otherwise glare on the screen. And in the mixed view I’m seeing, they dim the lighting in the room and tint it to match the color of the movie screen, which is really cool and subtly immersive.

The biggest difference between “the iPhone moment” and the “Vision Pro moment” is that in order to experience the “scrolling,” you have to put the thing on yourself. Everyone could just watch as Steve scrolled on the iPhone for the first time in public view. But to have the same “wow” moment with the Vision Pro, you have to physically relocate your actual body in meatspace to an Apple Store. Some people just aren’t willing to put in that effort for the chance of experiencing something that might convince them they actually want to spend three to four thousand dollars on a new gadget with all these limitations. It’s just not that kind of “big new thing.”

But it is another kind. It is a glimpse of the future of how Apple sees us doing everything digital in our lives. They are basically betting everything on being able to deliver this experience in a lighter, less obtrusive hardware package, at a more digestible price.

The first version of what became the Vision Pro was reportedly a pair of huge, clunky, stationary goggles that you had to move your head into; it didn’t move at all. It had all these little stalks pointed toward your eyes for eye tracking. It had a ton of wires coming out of it, snaking behind a nearby wall that hid the multiple computers it was connected to. But when you looked through it, it looked “real.” And it could track your gaze and respond to your eye movements. That was the experience they wanted to make.

They reached a point in development where it was deemed necessary to ship a public-facing product before it was truly ready, in order to get the kind of feedback they needed to make the idea work in the long run. That’s what I see happening with the Vision Pro. It has an excellent foundation that WILL be at the heart of how we do everything in the future. But for now, it only makes sense for enthusiasts, developers, and professionals, and that’s exactly who Apple is targeting with this phase of the visionOS push.

This is something to pay attention to. It may not be obvious yet how this will change everything, but it will. Even if it’s not Apple themselves who win the race, they will have contributed immensely to the way the public perceives the potential of this kind of product.